The Data Science Accelerator - now in its third year - is a capability-building programme which gives analysts from across the public sector the opportunity to develop their data science skills by delivering a project of real business value. The Accelerator runs every four months and applications for the next round open at the start of May 2017.

We wanted to share what our participants have worked on during the programme and how they’ve applied their data science skills. Here’s an update from our recently graduated 7th Accelerator cohort.

Predicting public order and violent offences on the London transport network

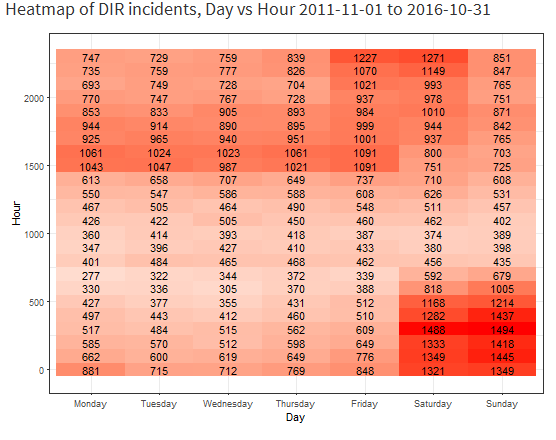

Kerry Cella from Transport for London (TfL) aimed to predict public order on the public transport network to inform police deployment. Kerry conducted data exploration into driver incident reports to validate prior anecdotal knowledge and built an interactive visualisation using Shiny to display temporal trends in incidents.

Kerry then used techniques for calculating kernel density estimation on a network to determine more realistic incident hotspots than had previously been possible using Euclidean methods and looked at the factors that might be driving them. Back at TfL, Kerry plans to add more features and predictors to the model, and create an interactive application to be used by operational staff.

Identifying and assessing interdisciplinary research

Robin Simpson from the Department for Business, Energy & Industrial Strategy (BEIS) looked at how interdisciplinary research (IDR) - which integrates data and techniques from across disciplines - can help address complex global issues such as climate change. The level of IDR being undertaken in the UK is not clear so Robin investigated how to identify the differences between IDR and standard research. Using a random forest model, he was able to predict with an accuracy of 70% whether a new piece of research would be classified as interdisciplinary.

This kind of analysis helps us to better understand the current research landscape, particularly for vital cross-cutting work which is difficult to classify using traditional subject areas.

Prioritising care home inspections

An Nguyen from the Care Quality Commission set out to address the discrepancy between actual and self-reported performance in care homes, and how this data is used in risk indicators which flag whether a care home should be inspected. After trying various machine learning methods, An settled on using Adaptive Boosted Trees to look at re-inspection ratings and predict which care homes are likely to get worse over time.

This model has improved the accuracy of ratings and allows for a focus on re-inspection of the highest risk homes. The next challenge will be to engage stakeholders, including inspectors, in formally testing and adopting the model.

Directing Parliamentary Question Requests

Tamsin Forbes from Department for Education (DfE) explored ways to automate the allocation of Parliamentary Questions (PQs). PQs are used by MPs to seek information from Ministers on the actions and decisions of their Departments.

Currently PQs are manually allocated to teams based on their identified subject. This is a time consuming process with a short turnaround time, which would greatly benefit from automation. PQs are entirely text based, so Tamsin used natural language processing and generated a term document matrix to numerically represent the text. She then used a random forest model to classify the text into the most frequent subjects, and is continuing the project with the aim of improving the accuracy of the classification.

Employer Covenant assessment modelling

Sam Blundy from The Pensions Regulator modelled the very resource intensive, largely manual process of assessing the health of sponsoring employers relative to their pension scheme liabilities (the “Employer Covenant”). This is a timely project in the context of a £500bn shortfall in Defined Benefit pensions scheme funding, and high profile cases such as the collapse of BHS and its associated pension fund.

Using a random forest prediction model in R, Sam was able to estimate covenant strength with over 60% accuracy against 10,000+ historic assessments. The model is deployable in its current form and has the potential to be used for real time risk assessment given the speed and breadth of its applicability relative to the existing manual process.

Patient journey networks

James Crosbie from NHS England sought to develop an interactive online tool to navigate the complicated flow of patients through the NHS system. The web app, built in D3, explores pathways and visualises specific patient journeys. It can be filtered by factors such as date, cost of pathway, gender of patient and similar.

The tool has been presented to senior analytical leads at Department of Health, and next steps will include detecting cost-related patterns.

Reproducible analytical pipeline

Laura Selby from DfE looked at tools and techniques for simplifying the process of producing statistical reports for the Department. The current semi-manual process is slow and complicated, and the end product - commonly a PDF - doesn’t always meet user needs. Laura aimed to use a new approach, using tidyverse in R to produce a high quality end product in the form of an HTML document (for external audiences) and a Shiny dashboard (for internal audiences).

This project has provided DfE with a functioning example of the use of automation and reproducibility. Next steps include discussions with GOV.UK on how this method could be used for HTML publication.

Automation of object detection from satellite imagery

Catherine Seale attended from UK Hydrographic Office (UKHO). The UKHO is a global marine geospatial information provider, and the charting authority for over 80 countries. The aim of this project was to improve knowledge of offshore infrastructure worldwide (such as oil rigs and wind turbines), to reduce the risk of collisions (and associated human and environmental disasters), and to automate the currently manual data capture process.

Using synthetic aperture radar imagery, Catherine applied image processing and computer vision techniques in Python to process open-source satellite imagery.

The outcome of the project is a working tool which successfully detects marine objects, and she is taking it further by using machine learning to add a classifier to label the detected objects.

If you would like to know more about the Accelerator please email us.

1 comment

Comment by Tony Hirst posted on

Is there a github repo anywhere where you collect examples of code produced as part of the Data Science Accelerator program?