Over the last year, the Government Data Science Partnership has been developing a Framework for Data Science Ethics. The framework seeks to help policy makers and data scientists maximise the potential for data science within government, whilst navigating the legal and ethical issues that accompany new forms of data analysis. Ipsos MORI were set the challenging task of engaging with the public and understanding their views on how government should use data science. The findings will be explored by data scientists, analysts, policymakers and external experts, and used to improve the first version of the ethical framework, launched today.

We anticipated that public awareness of data science would be low, and yet somehow had to get to a point at which we could discuss the finer details of ethics in data science, such as data quality, consent, and how much human oversight there should be. To do this, we needed a three-pronged approach: a dialogue through deliberative workshops; conjoint analysis; and an online engagement tool.

A dialogue approach

We spoke to 88 people across four locations as part of a series of deliberative public workshops. Given the complexity of the topic, we ran reconvened workshops across two days. On day one, we gradually introduced new information and ideas about how data science works to bring everyone up to the same level of understanding. This included stripping data science back to its core components – exploring how data is generated, how this can be aggregated into data sets, and how this can be analysed. On day two, we could continue the conversation and talk to them about the framework itself.

Piloting workshop materials before the main dialogue workshops was crucial. It revealed the preconceptions that people have, and the gaps in understanding that, if not filled, can restrict detailed debate of the opportunities, benefits and risks of data science design. Demonstrating the potential impact of data science through real-life case studies was key to engaging the public in a discussion about opportunities for data science. Together with the GDS Partnership, departments and the project’s Advisory Group, we developed a set of real and hypothetical case studies to assess opportunities across a spectrum from the most ethically acceptable to the least ethically acceptable.

Sharing these case studies made participants more willing to assess and comment on the concepts and mechanics of different data science projects. Only at this point could they identify what was and was not appropriate. Without this, measures of public opinion would only capture underlying attitudes to data and government in general.

Another key benefit of workshops is that they bring experts and members of the public together. We were fortunate to have 10 experts on hand across the workshops to clarify and aid discussion – in a separate blog post, two of them reflect on their experiences of the workshops in Taunton. Over the two days of workshops, we were impressed with the maturity of discussion, and the public’s ability to evaluate new and complex concepts. Watch the video to find out more:

Using conjoint analysis to get beneath stated responses

Deliberative workshops are really valuable for discussing issues in depth, but tell only part of the story. In addition to the workshops, we conducted an online survey with 2,003 adults aged 16-75 in the UK. This gave us both access to a larger pool of people, and an opportunity to gauge opinion on data science among members of the public who had not benefited from two days of deliberation.

Surveys often reveal only top-of-mind opinion, but we know that, when it comes to data, people’s opinions are often different from their actions (for example, people say they are worried about privacy but don’t necessarily change their privacy settings). A crucial part of the online survey therefore was to get beneath stated responses and tap into the subconscious decisions people make. To do this, we conducted a conjoint exercise, which identified the principles that are important to people when faced with different opportunities for data science.

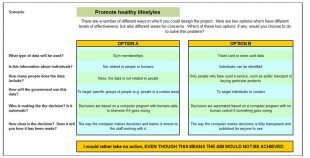

Conjoint analysis is tricky, so bear with this! The conjoint exercise involved asking respondents to imagine themselves as part of a team in government responsible for solving problems using data science techniques. Respondents were then presented with different scenarios where data science could take place. Within these scenarios, respondents could choose between two different data science projects to achieve the objective. They were also given the option to take no action, and were told that choosing this option would mean the aim was not achieved.

Within each option were six different ‘attributes’ – categories of key elements of the project – such as the type of data that would be used. Finally, within each ‘attribute’ were several ‘levels’ that represented a spectrum of the possible options within each attribute – such as personal data, aggregated data or open data. Levels were randomly rotated to ensure that every combination of different scenarios was seen by a substantial proportion of the sample. An example of how this was presented to respondents can be found below.
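As a rough illustration only (this is not the survey instrument itself), the structure described above can be sketched in a few lines of Python: each project is a set of attributes, each attribute takes one randomly drawn level, and every choice task offers two projects plus a "no action" option. Only the "type of data" attribute and its three levels come from the description above; the second attribute, its levels, and all function and variable names are hypothetical placeholders, and the real exercise used six attributes.

```python
import random

# Only "type_of_data" and its three levels come from the text above.
# The second attribute and its levels are hypothetical placeholders.
ATTRIBUTES = {
    "type_of_data": ["personal data", "aggregated data", "open data"],
    "placeholder_attribute": ["placeholder level A", "placeholder level B"],
}

NO_ACTION = "Take no action (the aim would not be achieved)"


def random_project(rng):
    """Draw one level at random for every attribute."""
    return {attr: rng.choice(levels) for attr, levels in ATTRIBUTES.items()}


def build_choice_task(rng):
    """Return two distinct project profiles plus the opt-out option."""
    project_a = random_project(rng)
    project_b = random_project(rng)
    while project_b == project_a:  # redraw so the two projects differ
        project_b = random_project(rng)
    return {"project_a": project_a, "project_b": project_b, "opt_out": NO_ACTION}


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the illustration is repeatable
    task = build_choice_task(rng)
    for name, option in task.items():
        print(name, "->", option)
```

Drawing levels independently at random for each respondent is one simple way to make sure that, across a large sample, every combination is seen by a reasonable share of people; the actual survey may have used a more structured design.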

The results provided insight into how people decide how government should use data science. They showed that the public make decisions based on both ethics and effectiveness, and that they were willing to trade off some ethical concerns to ensure that policy objectives were fulfilled. They also showed that support for data science was conditional, and that some scenarios, and some individuals, were more likely to result in a choice for no data science to take place.

Engaging with the public further

One of the objectives of the research was also to reach a wider audience, and engage those outside the research in the ethics of data science. In collaboration with Codelegs, we developed the “Data Dilemmas” online app. Building on the data from the online survey, the app provides a way of learning about data science and the ethical considerations that government has to make in designing data science projects. This includes a quiz that allows the public to build their own data science solution to a scenario, such as developing a project that will help improve careers advice, and find out how their selections compare with those of the wider public.

The Data Dilemmas app is available here: https://datadilemmas.co.uk – why not have a go!

Reflections for future engagement

Through both the online survey and the workshops, it was clear that the public welcomed the opportunity to discuss government use of data science, and were broadly reassured by the presence of a framework that policy makers and data scientists can use to make sure what they are doing is ethically appropriate.

It is difficult to develop a set of ‘do or don’t’ standards that can be applied universally across all data science projects – the public do not assess opportunities in that way. Instead, they conduct a more nuanced assessment to evaluate the methods against the objectives of the policy. Getting the policy opportunity right is just as crucial as the data science method. To date, the debate surrounding use of data science has largely focused on balancing public benefit with concerns about privacy and security; this research has shown that the public are willing and able to take the discussion a step further, and explore how values of fairness, transparency, agency and accountability are upheld within data science.

If you have any questions or feedback about this research, the tool or the government's data science ethical framework, we would welcome all contributions. Please email me or Madeleine Greenhalgh in the government's data team.

Steven Ginnis is Head of Digital Research at the Social Research Institute at Ipsos MORI
